AI INFERENCE AT THE EDGE.

Solve real-world problems in real time.

Inference as code

Deploy AI anywhere, as simply as writing code.

SocketRun turns inference infrastructure into clean, composable code.

No YAML sprawl. No manual scaling.

Just declarative, programmable inference that runs seamlessly from cloud to edge.

Code-first inference

Define and deploy your AI workloads in pure code — not config files.

Every model, runtime, and endpoint lives as versioned, testable source.

Write inference pipelines like functions.

Push to deploy.

Roll back or fork with Git-level control.
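
To make "pipelines like functions" concrete, here is a minimal sketch in plain Python. It does not use the SocketRun API, which this page does not show; the `pipeline` helper, the three step functions, and the toy scoring rule are all hypothetical stand-ins chosen for illustration.

```python
from __future__ import annotations

from functools import reduce
from typing import Any, Callable


def pipeline(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps left to right into a single callable (hypothetical helper)."""
    def run(value: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


def preprocess(text: str) -> list[str]:
    # Normalize the raw input into lowercase tokens.
    return text.lower().split()


def predict(tokens: list[str]) -> dict[str, float]:
    # Stand-in for a real model call: the fraction of tokens in a small word list.
    positive = {"good", "great", "fast"}
    hits = sum(1 for token in tokens if token in positive)
    return {"score": hits / len(tokens) if tokens else 0.0}


def postprocess(result: dict[str, float]) -> str:
    # Turn the raw score into a label.
    return "positive" if result["score"] >= 0.5 else "negative"


# The whole pipeline is an ordinary function: easy to version, test, and review.
classify = pipeline(preprocess, predict, postprocess)
```

Because `classify` is just a function, it can be unit-tested and diffed like any other source file, which is the property the code-first approach relies on.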

“If DevOps made infrastructure programmable, SocketRun makes inference programmable.”

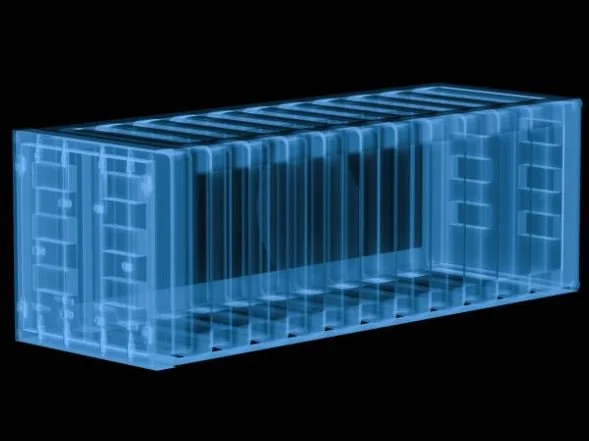

Run Anywhere

Your models, your hardware, your choice.

SocketRun detects available compute — GPU, CPU, or edge device — and automatically schedules inference across them.

Multi-node scheduling

Cloud-to-edge workload sync

Intelligent load balancing and failover
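
As an illustration of load balancing with failover, the sketch below picks the least-loaded healthy node and retries on another node when a call fails. It is an assumption about how such scheduling could work, not SocketRun's actual scheduler; `Node`, `pick_node`, `run_with_failover`, and the GPU-first preference order are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Node:
    name: str
    kind: str            # "gpu", "cpu", or "edge"
    healthy: bool = True
    active: int = 0      # requests currently in flight


# Assumed tie-break order when nodes are equally loaded.
PREFERENCE = {"gpu": 0, "cpu": 1, "edge": 2}


def pick_node(nodes: List[Node]) -> Node:
    """Return the least-loaded healthy node, preferring GPUs on ties."""
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise RuntimeError("no healthy nodes available")
    return min(candidates, key=lambda n: (n.active, PREFERENCE.get(n.kind, 99)))


def run_with_failover(
    nodes: List[Node],
    request: str,
    infer: Callable[[Node, str], str],
) -> Tuple[str, str]:
    """Run `infer` on the best node; on a connection error, mark it down and retry."""
    while True:
        node = pick_node(nodes)  # raises RuntimeError once every node has failed
        node.active += 1
        try:
            return node.name, infer(node, request)
        except ConnectionError:
            node.healthy = False  # take the node out of rotation and try the next one
        finally:
            node.active -= 1
```

A real scheduler would also need health checks to bring recovered nodes back into rotation; this sketch only removes them.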

Unified Runtime

A lightweight runtime abstracts away environment setup.

Run local, hybrid, or distributed inference with the same codebase:

socketrun deploy my_model.py

socketrun test --edge node-2

Consistent, reproducible, and blazing fast.

Secure by Design

Encrypted model packaging

Role-based access to endpoints

Private registry support
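
Role-based access to endpoints can be pictured as a lookup from role to permitted actions. The sketch below is a generic illustration under assumed names; the roles, the actions, and the `is_allowed` function are hypothetical and are not taken from SocketRun.

```python
from typing import Dict, Set

# Hypothetical roles and the endpoint actions each one may perform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"deploy", "invoke", "delete"},
    "developer": {"deploy", "invoke"},
    "client": {"invoke"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True if the role may perform the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Denying by default for unknown roles keeps a typo in a role name from silently granting access.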